What Does GPT Stand For in ChatGPT?

Have you ever wondered what “GPT” in ChatGPT means? It may look like just another tech abbreviation, but each word behind it describes how the underlying language model works. Knowing what this acronym stands for helps explain the technology and its uses. In this article, we’ll clarify what GPT means and show how it drives the conversations you have with ChatGPT.

What GPT Stands For in ChatGPT

GPT stands for “Generative Pre-trained Transformer,” and each word describes part of how the model works. “Generative” signifies the model’s ability to create new text rather than just repeat learned information. “Pre-trained” indicates it has learned from large amounts of text, much of it from the internet, before being put to use, enabling it to grasp language patterns and various writing styles. “Transformer” refers to a model architecture that excels at understanding language context by tracking how words relate to one another within a passage. Together, these components describe a robust tool for conversation, answering questions, and generating creative content.

Who Created ChatGPT?

ChatGPT was developed by OpenAI, an artificial intelligence research organization founded in December 2015. The organization’s mission is to ensure that artificial intelligence benefits all of humanity.

OpenAI has grown significantly since its founding. By 2023, the company had several hundred employees working on a wide range of AI projects. One of OpenAI’s main goals is to create artificial intelligence that is both safe and useful.

The technology behind ChatGPT is based on a neural network architecture known as the Transformer. This model enables ChatGPT to generate human-like text based on the input it receives.

ChatGPT was launched in November 2022 and quickly gained global popularity. Within about two months, it had reportedly reached roughly 100 million monthly users, making it one of the fastest-growing consumer applications in history.

OpenAI continues to improve and update ChatGPT to make it more responsive, reliable, and informative. Ongoing research focuses on enhancing the model’s understanding and safety, ensuring it remains a valuable tool for users worldwide.

The Birth of GPT: A Brief Historical Context

The creation of GPT (Generative Pre-trained Transformer) traces back to the early 2010s, when rapid progress in natural language processing (NLP) and deep learning reshaped how machines handled language. Neural networks allowed computers to analyze and generate text with growing accuracy.

In 2018, OpenAI introduced the first GPT model, built on the transformer architecture, which processed language more efficiently than earlier designs. GPT’s key innovation was pre-training on massive text datasets, enabling it to learn general language patterns before being fine-tuned for specific tasks.

This approach marked a major breakthrough in AI, showing that machines could produce coherent, context-aware text. With later versions like GPT-2 and GPT-3, the models became more advanced, expanding applications in content creation, chatbots, and communication.

GPT-4 brought notable advancements in reasoning, accuracy, and safety. Some versions of this model could process both text and images, an ability known as multimodality.

A new, faster version of GPT-4 was created to handle longer conversations efficiently while providing quicker responses. This update made the model even more reliable and better at understanding instructions.

OpenAI also introduced specialized models, like o1, which excel at deeper reasoning tasks. These models are particularly helpful for complex subjects such as math, coding, and logic.

Now, GPT-5 represents the next step in this line of development. It shows significant improvements in reasoning and understanding. GPT-5 can manage complex tasks better while keeping context in longer chats, offering responses that feel more human-like and trustworthy.

GPT’s evolution transformed NLP and sparked global discussions about AI ethics, safety, and the future of human–AI interaction.

Understanding the Acronym: Generative Pre-trained Transformer

Each word in this acronym captures an essential idea in contemporary AI and machine learning. Together, they outline the core architecture and training method behind ChatGPT and similar large language models.

“Generative”: The Power to Create

“Generative” simply means the ability to create new things. For ChatGPT, this means producing new text in response to what you ask, one piece at a time. Whether you want a story, an essay, or a poem, ChatGPT can draft it.

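For example, if you type:

“The capital of France is”

the model will most likely continue with “Paris,” because that is the most probable next word given the text it learned from.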
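A prompt like this is continued by repeatedly predicting a likely next word. The following is only a minimal sketch of that idea, assuming a tiny hand-written corpus and a simple word-pair (bigram) count table in place of a real neural network. The names `CORPUS`, `build_bigram_counts`, and `generate` are made up for illustration and are not part of ChatGPT.

```python
from collections import Counter, defaultdict

# Toy corpus (assumption): stands in for the huge text collection a real model learns from.
CORPUS = (
    "the capital of france is paris . "
    "the capital of italy is rome . "
    "the capital of france is paris ."
)

def build_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts

def generate(prompt, counts, max_new_words=1):
    """Extend the prompt by greedily appending the most frequent next word."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # the last word was never seen, so there is nothing to predict
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

counts = build_bigram_counts(CORPUS)
print(generate("The capital of France is", counts))  # the capital of france is paris
```

This toy model only looks at the last word of the prompt, so it would also answer “paris” for Italy. A GPT model instead takes the whole preceding text into account when choosing what comes next.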

What makes this really fun is its creativity. You can request it to write about anything, and it will generate original ideas. This open approach gives you endless options.

Additionally, it keeps track of what was said earlier in the same conversation, so follow-up requests can build on previous ones. The underlying model does not retrain itself after every chat, but the more detail you give it within a conversation, the more helpful its answers tend to be. Whether you’re using it for fun or serious tasks, ChatGPT can help turn your ideas into drafts.

It’s like having a brainstorming buddy that’s always ready to help. This creates a space for new ideas, allowing people and teams to explore fresh concepts together.

“Pre-trained”: Mastering Learning Before Fine-Tuning

Pre-training: Mastering Learning in ChatGPT

Pre-training is the first step in how ChatGPT becomes smart. During this phase, the model learns from vast amounts of text from books, articles, and websites. It picks up on patterns, grammar, and even the nuances of various topics. This broad knowledge helps create a strong foundation.

Fine-tuning: Polishing the Model for Real Use

Once pre-training is done, the next stage is fine-tuning. This stage is like polishing a diamond. Here, the model is trained further on narrower data so that it focuses on specific tasks or types of conversations. Fine-tuning helps make ChatGPT more useful in real-world situations, like answering questions or giving advice.

Think of pre-training as building a library filled with information. Fine-tuning is selecting the best books for a specific reader. Together, these processes allow ChatGPT to understand language and respond appropriately, making it versatile and effective.
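The two stages can be imitated with a toy word-count model. This is only an analogy under stated assumptions: real fine-tuning adjusts the weights of a neural network, whereas this sketch merely adds extra word-pair counts from a small, task-specific sample. The texts, the `weight` parameter, and the helper names `train` and `predict_next` are all hypothetical.

```python
from collections import Counter, defaultdict

def train(counts, text, weight=1):
    """Add weighted next-word counts from text into counts (modified in place)."""
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += weight
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of word, or None if the word is unseen."""
    followers = counts.get(word)
    return followers.most_common(1)[0][0] if followers else None

# Stage 1: "pre-training" on broad, general text.
general_text = "how are you today . how are you doing . how is it going ."
model = train(defaultdict(Counter), general_text)
before = predict_next(model, "how")

# Stage 2: "fine-tuning" on a small customer-support sample.
# The extra weight (assumption) lets the small sample shift the model's behavior.
support_text = "how can i help you today ."
train(model, support_text, weight=5)
after = predict_next(model, "how")

print(before, "->", after)  # are -> can
```

The general knowledge from the first stage is still in the model after the second stage. Fine-tuning only shifts which continuation it prefers for the target task.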

“Transformer”: The Engine Behind the Intelligence

The Transformer is the neural network architecture that powers ChatGPT. It helps the system understand and create text that sounds natural and human-like.

It was first developed by Google researchers in 2017 in a paper called “Attention Is All You Need.” This design became the base for many modern AI models, including OpenAI’s GPT series.

Unlike older models that read words one at a time, the Transformer processes all the words in a passage together. This makes it faster to train and better at understanding the meaning of whole sentences.

The Transformer uses attention mechanisms to focus on the most important words in a sentence. For example, in “The bird ate the worm because it was hungry,” it understands that “it” refers to “the bird.”
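The attention step for the word “it” can be sketched with a few lines of arithmetic. This is a minimal illustration of scaled dot-product attention weights, assuming hand-picked two-number vectors for each word. In a real Transformer these vectors are learned during training and are far larger, and the names `attention_weights` and `query_for_it` are made up for this example.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

# Hand-picked toy vectors (assumption): first number ~ "can be hungry", second ~ "is food".
words = ["bird", "ate", "worm"]
keys = [[2.0, 0.0], [0.5, 0.5], [0.0, 2.0]]
query_for_it = [2.0, 0.0]  # "it was hungry" looks for something that can be hungry

weights = attention_weights(query_for_it, keys)
for word, weight in zip(words, weights):
    print(f"{word:>5}: {weight:.2f}")

best = words[weights.index(max(weights))]
print("'it' attends most to:", best)
```

With these made-up vectors, “bird” receives the largest weight, which mirrors how the model links “it” to “the bird” in the sentence above.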

By learning from huge amounts of text, the Transformer picks up language patterns and context. This helps ChatGPT give answers that are clear, relevant, and easy to understand.

In short, the Transformer is the core of ChatGPT’s intelligence, allowing it to have smooth and meaningful conversations with people.

Why GPT Matters: The Power of Language Understanding

Language is one of the most powerful means humans have for conveying ideas and thinking them through. GPT models mark a significant advance in enabling machines to process and produce language with near-human fluency on many tasks. Here’s why this is important:

Changing How We Communicate

GPT is a significant leap forward in how computers understand language. By recognizing complex patterns, it helps make conversations between people and machines clearer and more effective. This technology allows for smoother interactions, changing how we connect with online platforms.

Sparking Creativity

More than just chatting, GPT encourages creativity. It helps writers, artists, and content creators come up with new ideas or polish their thoughts. This ability opens doors for fresh innovations and enhances the creative journey.

Making Information More Accessible

GPT plays an important role in making information easier to reach. It can simplify complicated texts or translate between languages, which helps people who find traditional communication challenging. Making knowledge available this widely empowers a broader audience.

Boosting Automation

In areas like customer service, GPT helps make things run more smoothly. Its ability to automate replies increases efficiency, allowing businesses to concentrate on more important tasks. This change not only saves time but also enhances the quality of service provided.

Grasping Context

One of the standout features of GPT is its understanding of context. It picks up on nuances and adjusts its responses based on the conversation. This capability leads to deeper and more meaningful exchanges.

Shaping Tomorrow

As GPT continues to develop, its influence on our lives will expand. Its uses are broad, ranging from education to entertainment. Embracing this technology can pave the way for smarter systems and better experiences for people worldwide.

In short, GPT is important because it uses language understanding to transform communication, creativity, and accessibility. It propels technological growth and creates connections between individuals and information.

How to Use ChatGPT?

ChatGPT is a helpful tool that you can use to have conversations and find information. To get started, simply type in your question or topic in the chat box. You can ask anything, from simple queries to more complex subjects. Once you send your message, ChatGPT will respond with answers or suggestions.

Visit the ChatGPT Website

To get started, head over to the official ChatGPT website at chat.openai.com. If you prefer using your phone, you can also download and use the ChatGPT mobile app.

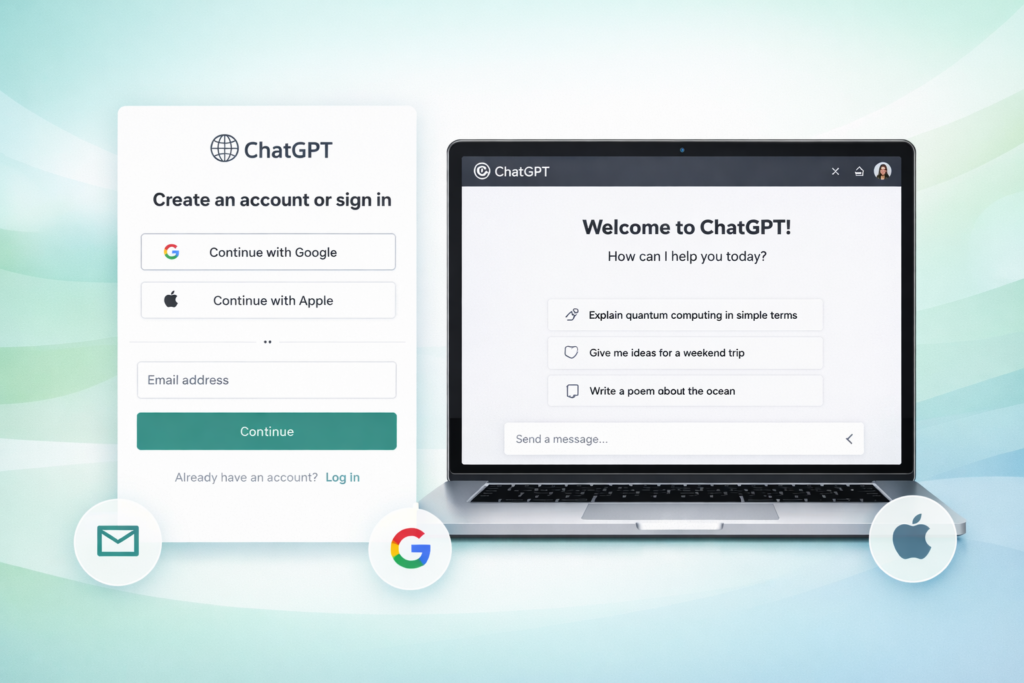

Create an Account or Sign In

Before using ChatGPT, you need to create an account or log in. You can sign up using your email address, Google account, or Apple account. Once logged in, you’ll find yourself on the main chat screen.

Start a New Conversation

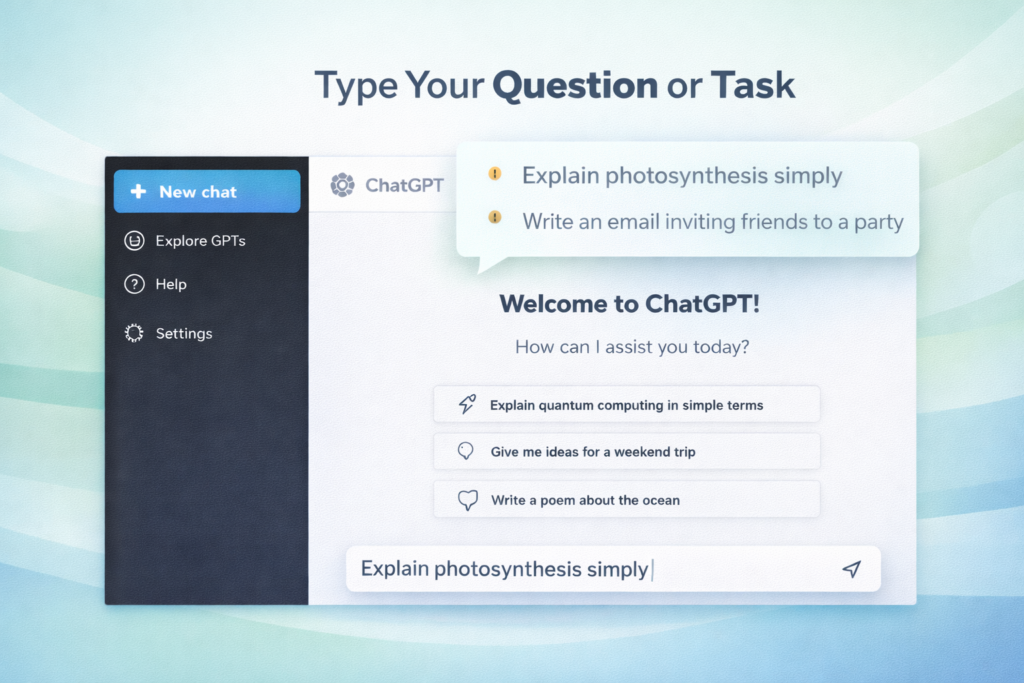

After signing in, look for the “New chat” button or simply open the chat window. This will display a text box at the bottom of the screen where you can type your question or request.

Type Your Question or Task

In the text box, write clearly what you need ChatGPT to do. Using specific language helps it understand better. For example, you might say, “Explain photosynthesis simply,” or “Write an email inviting friends to a party.”

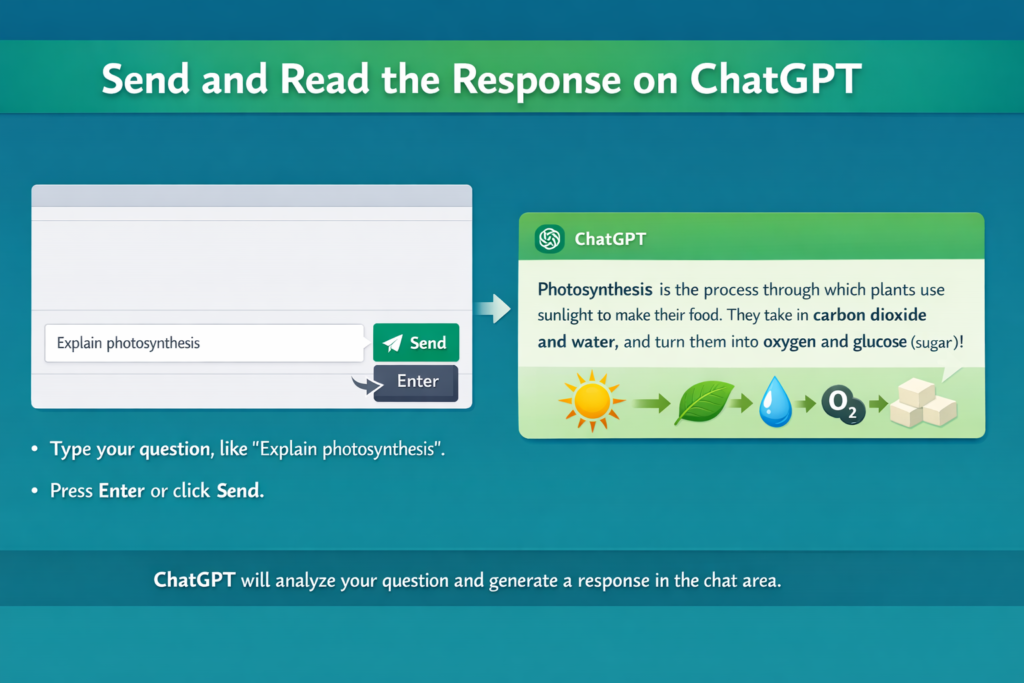

Send and Read the Response

Once you’ve entered your question, hit Enter or click “Send.” ChatGPT will analyze your input and generate a reply, which will appear in the chat area.

Continue the Conversation

Feel free to ask follow-up questions, seek clarification, or even request a different version of the answer. Just type your next question in the chat box.

Explore Advanced Features (Optional)

Depending on your version of ChatGPT, you may see additional features like image understanding, a study mode for educational assistance, or custom instructions to help tailor responses.

Provide Feedback on Answers

Beside each response, you’ll see thumbs up and down buttons. Click these to give feedback, which helps improve ChatGPT’s future answers.

Ethical and Social Implications of GPT

- Misinformation risks arise as GPT can generate realistic but false content, leading to mistrust.

- Privacy issues stem from the vast data used in training, which can include sensitive information collected without consent.

- Bias in training data may amplify stereotypes, resulting in unfair treatment of marginalized groups.

- Job displacement could occur as automation advances, threatening employment across various sectors.

- A lack of clear accountability when harmful content is generated complicates the resolution of negative impacts.

- Responsible development and regulation are crucial for balancing innovation, truth, privacy, and fairness.

Conclusion

The term “GPT” refers to Generative Pre-trained Transformer, which underpins contemporary conversational artificial intelligence. This technology merges language generation capabilities with extensive data learning and contextual understanding, all thanks to the Transformer framework. GPT has transformed the way people interact with computers, enabling fluid, smart, and user-friendly AI communication. As advancements continue, it is poised to influence the realms of creativity, education, and innovation across the globe.

FAQs

What does GPT stand for?

GPT stands for “Generative Pre-trained Transformer.”

What does “Generative” imply in GPT?

“Generative” means that the model can create new text based on the input it receives.

What does “Pre-trained” signify?

“Pre-trained” indicates that the model has been initially trained on a large dataset before being fine-tuned or deployed for specific tasks.

What is a “Transformer” in the context of GPT?

A “Transformer” is a type of neural network architecture designed for processing sequential data, especially useful for language tasks.

How is ChatGPT different from earlier models?

ChatGPT uses an advanced transformer architecture and extensive pre-training, allowing for more context-aware and coherent responses.

Is GPT only used for chat applications?

No, while it’s popular in chat applications, GPT can also be used in content generation, translation, and summarization.